We’re launching Data Generation From News, a new product to generate training data directly from real-world news.

It’s built on an approach we call “Future as Label”. Models are trained on historical news by making predictions using only the information available at prediction time. Those predictions are then scored against what actually happened afterward, so supervision comes from real-world outcomes rather than human annotators.

The result is scalable and auditable training data generated directly from real-world sources.

While this post focuses on news, Future as Label applies to any timestamped data source where outcomes resolve over time (e.g. SEC filings, internal emails).

Learning From the Future

The core idea behind Future as Label is simple. The resolution of real-world events provides the supervision signal.

Instead of relying on annotated datasets, models make predictions using only the information available at the time of prediction. As with any real-world forecast, the outcome is uncertain beforehand but easy to verify once it has happened.

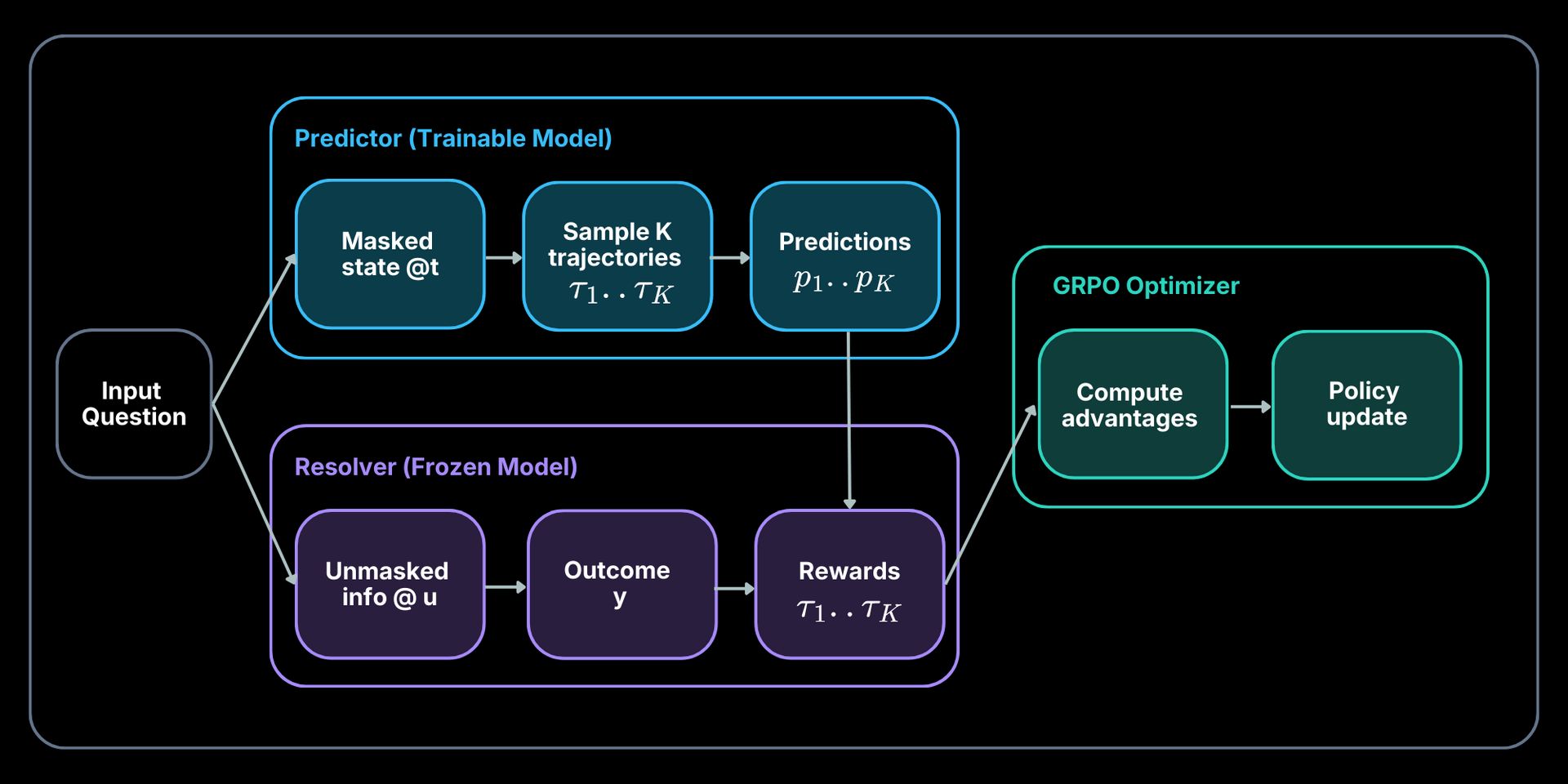

The model is explicitly restricted from seeing anything published after the prediction point, while outcome resolution and scoring happen later using information the model never observes. This creates a strict separation between prediction and supervision, as shown in the figure below.

Training Architecture for Outcome-Based Forecasting

Learning is driven entirely by realized outcomes, enabling scalable, outcome-based supervision for open-world prediction tasks.

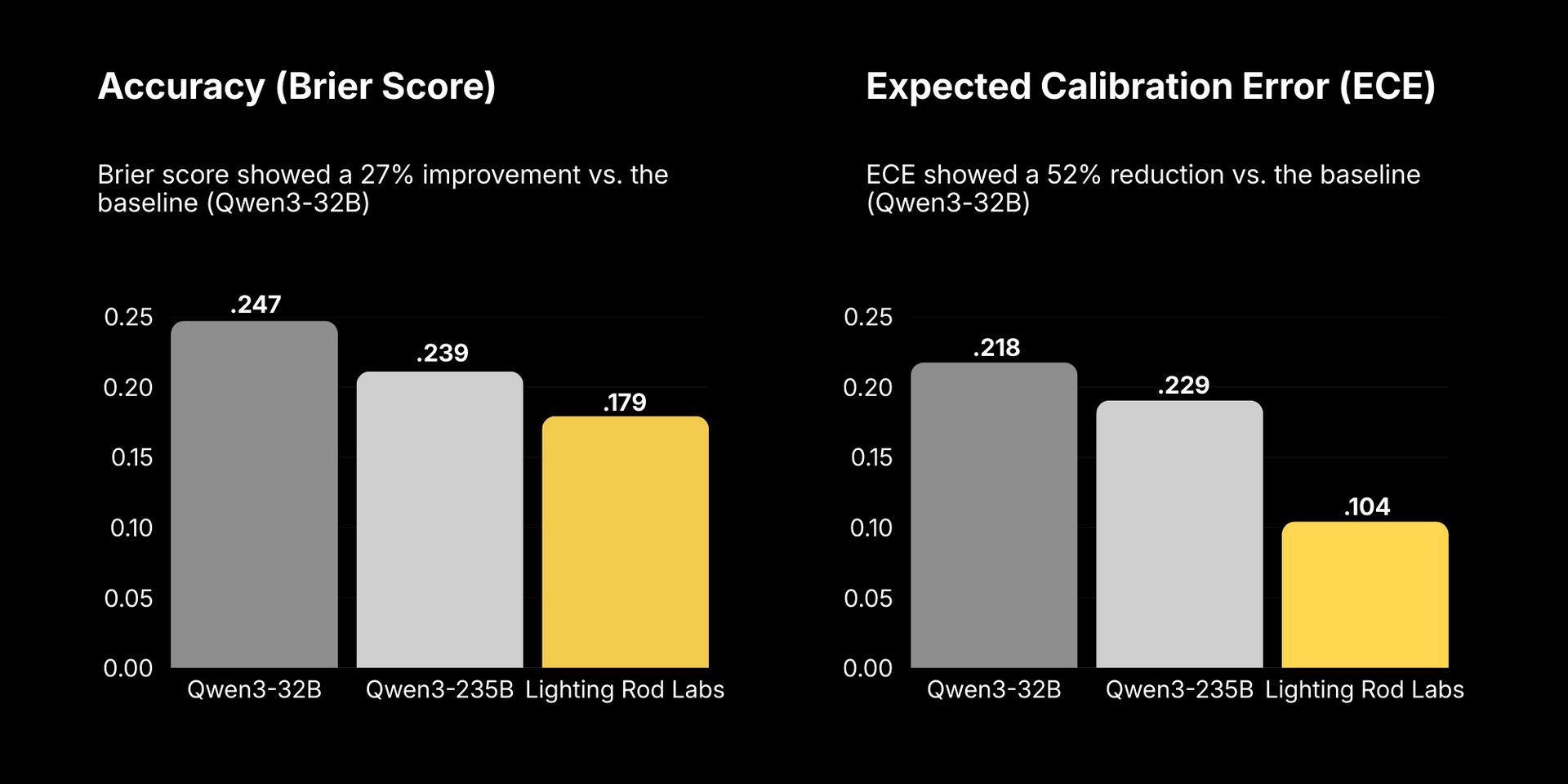

On real-world forecasting benchmarks, Qwen3-32B fine-tuned using this approach improves Brier score by 27% and roughly halves calibration error relative to its pretrained baseline. Despite being 7x smaller, it also outperforms Qwen3-235B on both constructed future event prediction tasks and the Metaculus benchmark.
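For readers unfamiliar with the two metrics, here is a minimal Python sketch of how they are commonly computed for binary questions. The Brier score is the mean squared error between forecast probabilities and 0/1 outcomes. For calibration, the sketch assumes expected calibration error with equal-width bins; this post does not specify which calibration metric was used, so treat that choice, the function names, and the toy values as illustrative only.

```python
from typing import Sequence


def brier_score(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes (lower is better)."""
    if len(probs) != len(outcomes) or len(probs) == 0:
        raise ValueError("probs and outcomes must be non-empty and the same length")
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


def expected_calibration_error(
    probs: Sequence[float], outcomes: Sequence[int], n_bins: int = 10
) -> float:
    """Weighted average gap between mean forecast and observed frequency per bin.

    Assumption: equal-width probability bins; other binning schemes exist.
    """
    if len(probs) != len(outcomes) or len(probs) == 0:
        raise ValueError("probs and outcomes must be non-empty and the same length")
    bins = [[] for _ in range(n_bins)]
    for p, o in zip(probs, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        bins[idx].append((p, o))
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        mean_forecast = sum(p for p, _ in bucket) / len(bucket)
        observed_freq = sum(o for _, o in bucket) / len(bucket)
        ece += (len(bucket) / len(probs)) * abs(mean_forecast - observed_freq)
    return ece


# Toy values, not benchmark data.
toy_probs = [0.5, 0.5]
toy_outcomes = [1, 0]
toy_brier = brier_score(toy_probs, toy_outcomes)
toy_ece = expected_calibration_error(toy_probs, toy_outcomes)
```

A forecaster that always answers 0.5 scores a Brier of 0.25 on any binary question set, which is a useful reference point when reading relative improvements.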

Benchmarking Results

We describe this approach in detail in our paper, Future as Label: Scalable Supervision from Real-World Outcomes.

Turning News Into Training Data

Data Generation From News applies Future as Label to a large, timestamped news corpus to produce on-demand training datasets.

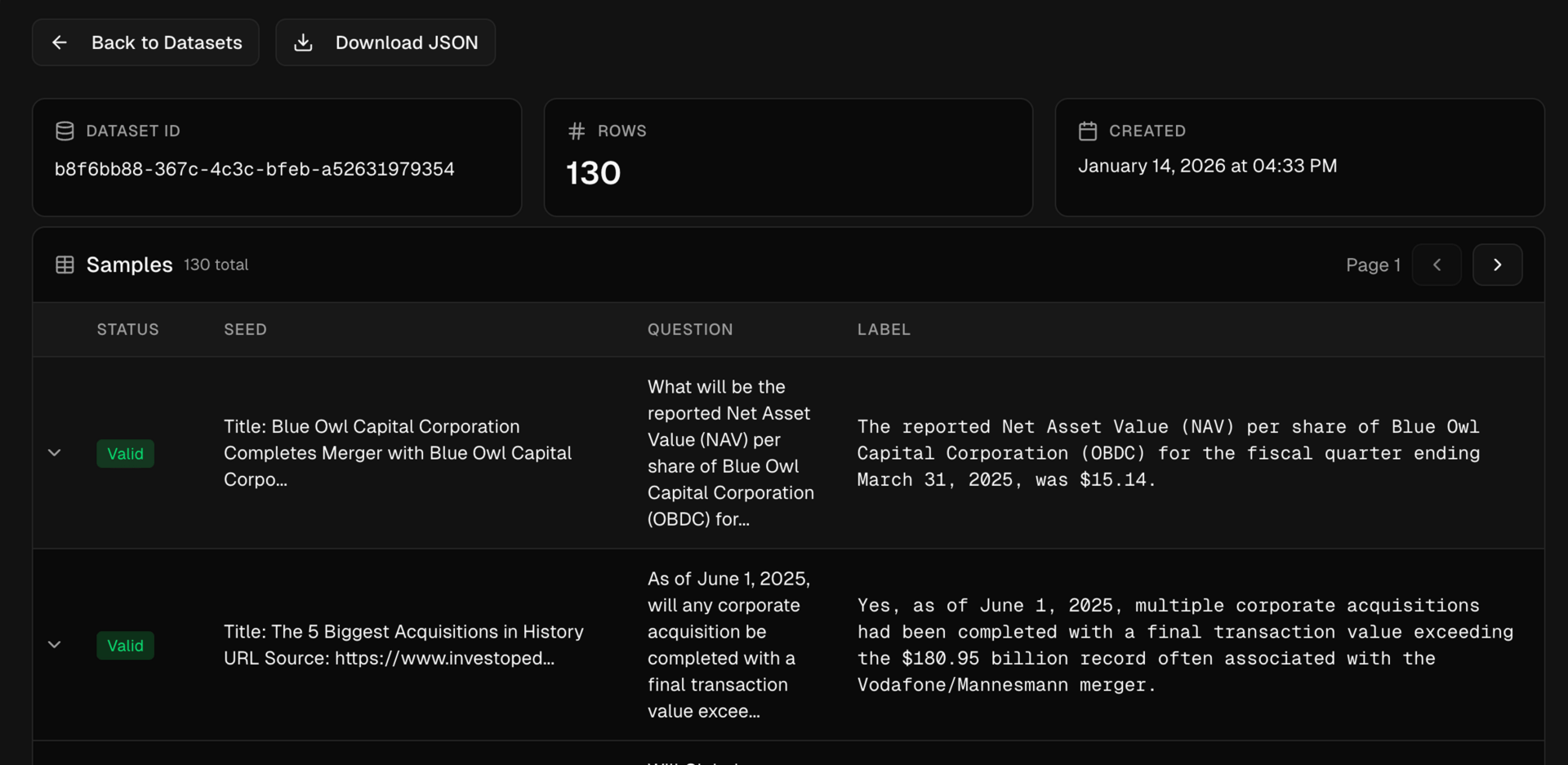

You specify your criteria, such as topics, timeframe, and question type, and the system generates a downloadable dataset. A sample output is available as a public dataset on Hugging Face.

The system automatically constructs future-facing event questions grounded in the news, for example:

As of June 1, 2025, will any corporate acquisition surpass the $180.95 billion record held by the Vodafone Mannesmann merger?

What will be the total global M&A volume for the first half of 2025, defined as January 1 to June 30, 2025, according to Reuters or Dealogic?

For each question, we fix a cutoff time and construct the model input using only articles published before that point. Information published after the cutoff is excluded by construction, ensuring that the input reflects exactly what would have been known at prediction time.
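The cutoff rule can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the system's actual implementation: the `Article` type, its fields, and `build_context` are hypothetical names, and the sketch assumes every timestamp is timezone-aware so comparisons are unambiguous.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Article:
    # Hypothetical article shape used only for this sketch.
    url: str
    published_at: datetime  # must be timezone-aware
    text: str


def build_context(articles: list[Article], cutoff: datetime) -> list[Article]:
    """Return articles published strictly before the cutoff, oldest first."""
    if cutoff.tzinfo is None:
        raise ValueError("cutoff must be timezone-aware")
    visible = [a for a in articles if a.published_at < cutoff]
    return sorted(visible, key=lambda a: a.published_at)


# Placeholder articles; an article stamped exactly at the cutoff is excluded.
cutoff = datetime(2025, 6, 1, tzinfo=timezone.utc)
articles = [
    Article("https://example.com/at-cutoff", datetime(2025, 6, 1, tzinfo=timezone.utc), "..."),
    Article("https://example.com/before", datetime(2025, 5, 30, tzinfo=timezone.utc), "..."),
    Article("https://example.com/after", datetime(2025, 6, 2, tzinfo=timezone.utc), "..."),
    Article("https://example.com/earlier", datetime(2025, 5, 1, tzinfo=timezone.utc), "..."),
]
context = build_context(articles, cutoff)
```

Using a strict `<` comparison is a design choice in this sketch: anything published at or after the cutoff instant is treated as future information.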

Outcomes are resolved later using sources published after the cutoff. If an outcome cannot be resolved with high confidence, the example is dropped. This ensures that retained examples reflect a realistic prediction setting, without retroactive labeling or future information leakage.
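One way to picture the drop rule is as a filter over resolution attempts. The sketch below is hypothetical: the `Resolution` type, its `confidence` field, and the 0.9 threshold are assumptions made for illustration, since this post does not describe how resolution confidence is measured.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Resolution:
    # Hypothetical result of trying to resolve one question from post-cutoff sources.
    question_id: str
    outcome: Optional[bool]  # None when no outcome could be determined
    confidence: float        # assumed resolver confidence in [0, 1]


def keep_resolved(
    resolutions: list[Resolution], min_confidence: float = 0.9
) -> list[Resolution]:
    """Drop examples with no outcome or with confidence below the threshold."""
    return [
        r
        for r in resolutions
        if r.outcome is not None and r.confidence >= min_confidence
    ]


# Placeholder values for illustration only.
attempts = [
    Resolution("q1", True, 0.98),
    Resolution("q2", None, 0.0),    # unresolved: dropped
    Resolution("q3", False, 0.55),  # low confidence: dropped
]
retained = keep_resolved(attempts)
```

Dropping uncertain examples trades dataset size for label quality, which matters here because the label is the only supervision signal.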

Each example is stored as a single, auditable data record tied to a specific cutoff and set of sources. You can inspect exactly what information was available when a prediction was made and how the outcome was determined.
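To make the idea of an auditable record concrete, here is a hypothetical Python sketch of what one might contain. The field names and layout are assumptions for illustration and are not the product's actual schema; the values are placeholders.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ForecastRecord:
    # Hypothetical record layout; every field name here is illustrative.
    question: str
    cutoff: str  # ISO 8601 timestamp of the prediction point
    outcome: str  # resolved answer, determined after the cutoff
    context_sources: list[str] = field(default_factory=list)     # published before cutoff
    resolution_sources: list[str] = field(default_factory=list)  # published after cutoff


def to_jsonl_line(record: ForecastRecord) -> str:
    """Serialize one record as a single JSON line with stable key order."""
    return json.dumps(asdict(record), sort_keys=True)


# Placeholder values, not real dataset content.
record = ForecastRecord(
    question="Will event X happen by 2025-06-30?",
    cutoff="2025-06-01T00:00:00+00:00",
    outcome="no",
    context_sources=["https://example.com/article-before-cutoff"],
    resolution_sources=["https://example.com/article-after-cutoff"],
)
line = to_jsonl_line(record)
```

Keeping the context sources and resolution sources in separate fields makes it straightforward to check that nothing used for resolution was visible at prediction time.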

Data Generation From News Example Output

Applying Future as Label to Prediction Tasks

In practice, this approach is well suited to use cases such as:

Domain-specific models, using legal filings, case histories, or insurance records to predict outcomes such as court decisions, claim resolutions, or settlement likelihoods.

Risk and compliance monitoring, using SEC filings and regulatory updates to predict downstream events like enforcement actions, amendments, or regulatory approvals.

Scientific forecasting, using experimental or observational data to predict whether an intervention will produce a specific outcome.

This training pattern applies to any domain with timestamped inputs and objectively resolvable outcomes. The same approach can be used to fine-tune models in any domain where better prediction performance requires domain-specific expertise.

What’s Next

We are expanding Data Generation From News to additional timestamped sources, starting with SEC filings and clinical trial reports.

If you want to explore the system directly, you can try Data Generation From News here.

We are working on a Lightning Rod SDK that lets you generate, transform, and verify datasets in just a few lines of code. The goal is to give full control over how datasets are constructed, while keeping everything inside your existing Python environment.